如想要在 Kubernetes 中的部署高可用的集群监控系统,一种比较简单的方式是使用 kube-prometheus 项目。

该项目基于 Prometheus Operator 构建,包含 Prometheus、Alertmanager、Node Exporter、Blackbox Exporter、Grafana……等组件,并已预配置为从所有 Kubernetes 组件收集指标,还提供了一组默认的监控面板和告警规则,基本上可以做到开箱即用,减少了许多非常繁琐的安装配置操作。

1. 环境

| IP | 主机名 | 角色 |

|---|---|---|

| 192.168.50.130 | k8s-control-1 | 控制平面 |

| 192.168.50.135 | k8s-worker-1 | 工作节点1 |

| 192.168.50.136 | k8s-worker-2 | 工作节点2 |

操作系统为 Ubuntu 24.04.3,硬件配置为 4核-8G-200G 虚拟机。

Kubernetes 的版本为 1.34.1,并已部署了 Metrics Server 和 Ingress-nginx。

2. 下载

请根据 Kubernetes 版本下载对应的 kube-prometheus,具体可见:

| kube-prometheus stack | Kubernetes 1.29 | Kubernetes 1.30 | Kubernetes 1.31 | Kubernetes 1.32 | Kubernetes 1.33 | Kubernetes 1.34 |

|---|---|---|---|---|---|---|

release-0.14 | ✔ | ✔ | ✔ | x | x | x |

release-0.15 | x | x | ✔ | ✔ | ✔ | x |

release-0.16 | x | x | ✔ | ✔ | ✔ | ✔ |

main | x | x | x | ✔ | ✔ | ✔ |

我这里的 Kubernetes 版本为 1.34.1,所以下载 0.16版本的 kube-prometheus。

# 下载

wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.16.0.tar.gz

# 解压

tar zxvf kube-prometheus-0.16.0.tar.gz & cd kube-prometheus-0.16.0

3. 安装

kubectl apply --server-side -f manifests/setup

kubectl wait \

--for condition=Established \

--all CustomResourceDefinition \

--namespace=monitoring

kubectl apply -f manifests/

安装过程需拉取多个镜像,时间会较长,我这边差不多花了近二十分钟。

另,由于墙的原因可能无法直接拉取镜像,需自行替换镜像源或使用代理,这里不再赘述。

正确安装后,相关资源列表如下:

$ kubectl get deploy -n monitoring

NAME READY UP-TO-DATE AVAILABLE

blackbox-exporter 1/1 1 1

grafana 1/1 1 1

kube-state-metrics 1/1 1 1

prometheus-adapter 2/2 2 2

prometheus-operator 1/1 1 1

$ kubectl get pods -n monitoring

NAME READY STATUS RESTARTS

alertmanager-main-0 2/2 Running 0

alertmanager-main-1 2/2 Running 0

alertmanager-main-2 2/2 Running 0

blackbox-exporter-947ff4cdb-gqhl8 3/3 Running 0

grafana-65778d656b-d7lm7 1/1 Running 0

kube-state-metrics-bfc8d7df4-dkbtt 3/3 Running 0

node-exporter-64qdf 2/2 Running 0

node-exporter-6z85s 2/2 Running 0

node-exporter-vmgb8 2/2 Running 0

prometheus-adapter-6c5fcc994f-xcl5b 1/1 Running 0

prometheus-adapter-6c5fcc994f-z7g2g 1/1 Running 0

prometheus-k8s-0 2/2 Running 0

prometheus-k8s-1 2/2 Running 0

prometheus-operator-64bd998cdd-v4rpb 2/2 Running 0

$ kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)

alertmanager-main ClusterIP 10.101.143.153 <none> 9093/TCP,8080/TCP

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP

blackbox-exporter ClusterIP 10.109.206.113 <none> 9115/TCP,19115/TCP

grafana ClusterIP 10.107.162.202 <none> 3000/TCP

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP

node-exporter ClusterIP None <none> 9100/TCP

prometheus-adapter ClusterIP 10.97.199.94 <none> 443/TCP

prometheus-k8s ClusterIP 10.107.246.150 <none> 9090/TCP,8080/TCP

prometheus-operated ClusterIP None <none> 9090/TCP

prometheus-operator ClusterIP None <none> 8443/TCP

4. 外部访问

4.1. NodePort

如果想要从外部访问 Prometheus、AlertManager 和 Grafana 的 WebUI:

一种方式是将 prometheus-k8s、grafana、alertmanager-main 这三个服务的网络类型由 ClusterIP 改为 NodePort,具体过程略。

另外,从 kube-prometheus 的 0.11 版本开始增加了 NetworkPolicy,默认仅允许指定的内部组件访问,所以需要删除这些 NetworkPolicy,否则无法通过浏览器打开 WebUI。

kubectl delete -f manifests/grafana-networkPolicy.yaml

kubectl delete -f manifests/prometheus-networkPolicy.yaml

kubectl delete -f manifests/alertmanager-networkPolicy.yaml

4.2. Ingress

从外部访问 WebUI,除了使用 NodePort,更好的方式是使用 Ingress 或 Gateway 。

这里给出 ingress-nginx 的相关配置:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: prometheus-ingress

namespace: monitoring

spec:

ingressClassName: nginx

rules:

- host: kube-grafana.igeeksky.com # grafana 的域名

http:

paths:

- pathType: Prefix

path: /

backend:

service:

name: grafana

port:

number: 3000

- host: kube-prometheus.igeeksky.com # prometheus 的域名

http:

paths:

- pathType: Prefix

path: /

backend:

service:

name: prometheus-k8s

port:

number: 9090

- host: kube-alertmanager.igeeksky.com # alertmanager 的域名

http:

paths:

- pathType: Prefix

path: /

backend:

service:

name: alertmanager-main

port:

number: 9093

注:Prometheus 和 AlertManager 的 WebUI 并没有密码保护,如需直接曝露到外网,请通过 Ingress 配置 whitelist 和 BasicAuth,这里略。

见:https://prometheus-operator.dev/kube-prometheus/kube/exposing-prometheus-alertmanager-grafana-ingress/

见:https://prometheus-operator.dev/docs/platform/exposing-prometheus-and-alertmanager/

如前所述,默认配置中有 NetworkPolicy,只有限定的内部组件才能访问。

如希望通过 ingress-nginx 正常转发外部请求,还需将其添加到 NetworkPolicy 白名单,或删除所有的 NetworkPolicy。

这里以 prometheus-networkPolicy.yaml 为例,添加 ingress → Prometheus 的访问规则。

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

labels:

app.kubernetes.io/component: prometheus

app.kubernetes.io/instance: k8s

app.kubernetes.io/name: prometheus

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 3.5.0

name: prometheus-k8s

namespace: monitoring

spec:

egress:

- {}

ingress:

- from:

- podSelector:

matchLabels:

app.kubernetes.io/name: prometheus

ports:

- port: 9090

protocol: TCP

- port: 8080

protocol: TCP

- from:

- podSelector:

matchLabels:

app.kubernetes.io/name: grafana

- namespaceSelector: # 添加规则:允许来自命名空间为 ingress-nginx 的组件访问 prometheus

matchLabels:

app.kubernetes.io/instance: ingress-nginx

ports:

- port: 9090

protocol: TCP

podSelector:

matchLabels:

app.kubernetes.io/component: prometheus

app.kubernetes.io/instance: k8s

app.kubernetes.io/name: prometheus

app.kubernetes.io/part-of: kube-prometheus

policyTypes:

- Egress

- Ingress

其它两个文件比照修改即可,修改完成后,执行命令应用更改:

kubectl apply -f manifests/grafana-networkPolicy.yaml

kubectl apply -f manifests/prometheus-networkPolicy.yaml

kubectl apply -f manifests/alertmanager-networkPolicy.yaml

通过以上配置之后,即可顺利从外部访问 WebUI,其中 Grafana 的用户名密码默认是 admin/admin。

另,经过我的测试,配置了 NetworkPolicy 后访问速度会明显变慢。

如果 Kubernetes 集群已使用防火墙与外部网络做了很好的隔离,而且集群部署的实例是高度可信的, 可以考虑直接删除 NetworkPolicy。

5. 数据持久化

kube-prometheus 部署的集群,Prometheus、AlertManager、Grafana 均默认采用 emptyDir 存储,存在数据丢失风险,建议更改存储方案。

因为 Prometheus 需要较好的写入性能,而且各副本实例会独立采集和管理数据,即各实例之间的数据是相互隔离的,并不太适合使用 NFS 这类网络共享存储方案,所以这里采用了本地存储。

另,如要实现大规模集群的长期数据存储,或有多个 kubernetes 集群需统一采集指标数据,则更推荐使用 Thanos 项目。

创建 StorageClass

# local-storage.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: local-storage

# 为了简单起见,这里直接使用 no-provisioner,所以后续需要静态方式创建 PV

# 如果想要实现动态供应,可自行安装 rancher.io/local-path

provisioner: kubernetes.io/no-provisioner

# 延迟绑定,配合Pod调度

volumeBindingMode: WaitForFirstConsumer

# 回收策略:保留数据

reclaimPolicy: Retain

应用清单

kubectl apply -f local-storage.yaml

5.1. Prometheus

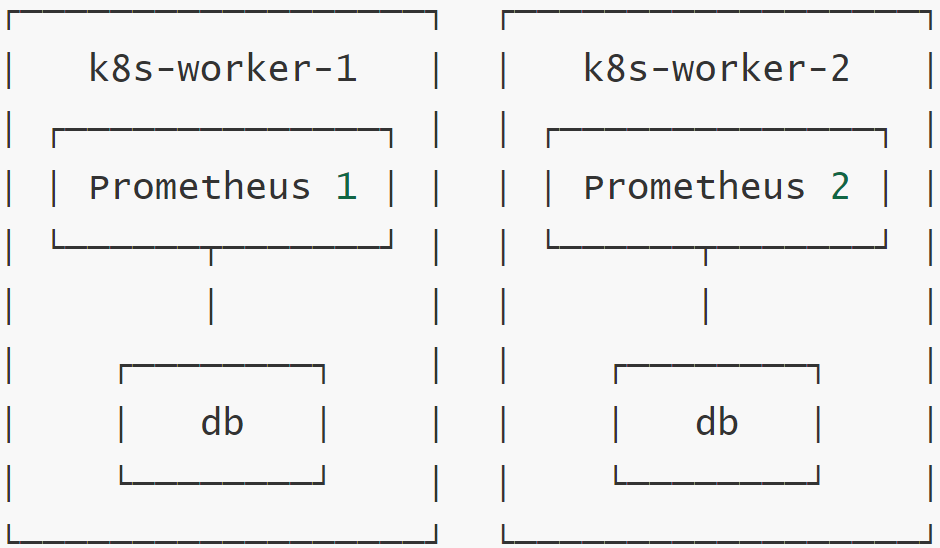

Prometheus 会部署 2 个副本,存储方案需考虑以下几点:

1、每个副本实例的数据是独立采集和管理,所以需创建 2 个数据目录(2 个 PV);

2、每个节点有且仅有一个副本实例,每个节点有且仅有一个数据目录;

3、由于使用本地存储,每个副本实例和其对应的数据目录必须位于同一节点。

创建数据目录

# 分别在 k8s-worker-1 和 k8s-worker-2 上执行

sudo mkdir -p /data/prometheus

# 修改目录属主(组和用户见 prometheus-prometheus.yaml 的 securityContext)

sudo chown -R 1000:2000 /data/prometheus

# 修改权限

sudo chmod -R 775 /data/prometheus

创建 PV

# prometheus-pvs.yaml

# 绑定到k8s-worker-1

apiVersion: v1

kind: PersistentVolume

metadata:

name: prometheus-pv-1

spec:

capacity:

storage: 50Gi

accessModes: [ "ReadWriteOnce" ]

persistentVolumeReclaimPolicy: Retain

storageClassName: local-storage

local:

# 本地目录

path: /data/prometheus

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

# 指定节点

values: [ "k8s-worker-1" ]

---

# 绑定到 k8s-worker-2

apiVersion: v1

kind: PersistentVolume

metadata:

name: prometheus-pv-2

spec:

capacity:

storage: 50Gi

accessModes: [ "ReadWriteOnce" ]

persistentVolumeReclaimPolicy: Retain

storageClassName: local-storage

local:

# 本地目录

path: /data/prometheus

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

# 指定节点

values: [ "k8s-worker-2" ]

应用清单

kubectl apply -f prometheus-pvs.yaml

注:Prometheus 采用自定义资源方式部署,默认会自动创建 PVC,因此无需手动创建。

Prometheus 资源清单

# 修改 prometheus-prometheus.yaml (仅新增亲和性、数据保留时间和存储配置)

apiVersion: monitoring.coreos.com/v1

kind: Prometheus

metadata:

labels:

app.kubernetes.io/component: prometheus

app.kubernetes.io/instance: k8s

app.kubernetes.io/name: prometheus

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 3.5.0

name: k8s

namespace: monitoring

spec:

alerting:

alertmanagers:

- apiVersion: v2

name: alertmanager-main

namespace: monitoring

port: web

enableFeatures: []

externalLabels: {}

image: quay.io/prometheus/prometheus:v3.5.0

nodeSelector:

kubernetes.io/os: linux

podMetadata:

labels:

app.kubernetes.io/component: prometheus

app.kubernetes.io/instance: k8s

app.kubernetes.io/name: prometheus

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 3.5.0

podMonitorNamespaceSelector: {}

podMonitorSelector: {}

probeNamespaceSelector: {}

probeSelector: {}

replicas: 2

# 新增:数据保留时间

retention: 15d

# 新增:亲和性配置

affinity:

nodeAffinity: # 确保部署到指定的 2 个节点

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s-worker-1

- k8s-worker-2

podAntiAffinity: # 确保一个节点仅部署一个副本

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchLabels:

app.kubernetes.io/name: prometheus

topologyKey: "kubernetes.io/hostname"

# 新增:存储配置

storage:

# 使用模板方式创建 PVC

volumeClaimTemplate:

spec:

storageClassName: local-storage

accessModes: ["ReadWriteOnce"]

resources:

requests:

storage: 50Gi

resources:

requests:

memory: 400Mi

ruleNamespaceSelector: {}

ruleSelector: {}

scrapeConfigNamespaceSelector: {}

scrapeConfigSelector: {}

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 1000

serviceAccountName: prometheus-k8s

serviceMonitorNamespaceSelector: {}

serviceMonitorSelector: {}

version: 3.5.0

应用更改

# 如果是修改现有部署,需先删除原有的 statefulset,否则不会变更数据存储

kubectl delete statefulset prometheus-k8s -n monitoring

# 重新应用更改

kubectl apply -f prometheus-prometheus.yaml

5.2. AlertManager

参考一:https://prometheus.io/docs/alerting/latest/high_availability/

参考二:https://groups.google.com/g/prometheus-users/c/867Be7KyUPE

AlertManager 默认会部署 3 个副本,存储数据主要有静默规则(silences)和通知日志(nflog),且数据量非常小。

每个副本的数据均独立存储和管理,副本之间会使用 Gossip 协议进行数据同步。所以在默认情况下(emptyDir),只有当所有副本同时重启才会出现数据丢失的情况。

虽然如此,但为了稳妥起见,也改为使用本地存储。

AlertManager 与 Prometheus 一样都是使用 StatefulSet 部署,因此整体操作几乎完全一致。

创建数据目录

# 分别在 k8s-worker-1 和 k8s-worker-2 上执行

sudo mkdir -p /data/alertmanager

# 修改目录属主(组和用户见 alertmanager-alertmanager.yaml 的 securityContext)

sudo chown -R 1000:2000 /data/alertmanager

# 修改权限

sudo chmod -R 775 /data/alertmanager

创建 PV

# alertmanager-pvs.yaml

# 绑定到k8s-worker-1

apiVersion: v1

kind: PersistentVolume

metadata:

name: alertmanager-pv-1

spec:

capacity:

storage: 2Gi

accessModes: [ "ReadWriteOnce" ]

persistentVolumeReclaimPolicy: Retain

storageClassName: local-storage

local:

# 本地目录

path: /data/alertmanager

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

# 指定节点

values: [ "k8s-worker-1" ]

---

# 绑定到 k8s-worker-2

apiVersion: v1

kind: PersistentVolume

metadata:

name: alertmanager-pv-1

spec:

capacity:

storage: 2Gi

accessModes: [ "ReadWriteOnce" ]

persistentVolumeReclaimPolicy: Retain

storageClassName: local-storage

local:

# 本地目录

path: /data/alertmanager

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

# 指定节点

values: [ "k8s-worker-2" ]

应用清单

kubectl apply -f alertmanager-pvs.yaml

注:AlertManager 采用自定义资源方式部署,默认会自动创建 PVC,因此无需手动创建。

AlertManager 资源清单

apiVersion: monitoring.coreos.com/v1

kind: Alertmanager

metadata:

labels:

app.kubernetes.io/component: alert-router

app.kubernetes.io/instance: main

app.kubernetes.io/name: alertmanager

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 0.28.1

name: main

namespace: monitoring

spec:

image: quay.io/prometheus/alertmanager:v0.28.1

nodeSelector:

kubernetes.io/os: linux

podMetadata:

labels:

app.kubernetes.io/component: alert-router

app.kubernetes.io/instance: main

app.kubernetes.io/name: alertmanager

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 0.28.1

# 默认情况下,AlertManager 只能部署在 worker 节点

# 因为我当前实验环境只有 2 个工作节点,所以副本数改为 2

replicas: 2

# 新增:亲和性配置

affinity:

nodeAffinity: # 确保部署到指定的 2 个节点

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s-worker-1

- k8s-worker-2

# 确保一个节点仅部署一个副本

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchLabels:

app.kubernetes.io/name: alertmanager

topologyKey: "kubernetes.io/hostname"

# 新增:存储配置

storage:

# 使用模板方式创建 PVC

volumeClaimTemplate:

spec:

storageClassName: local-storage

accessModes: ["ReadWriteOnce"]

resources:

requests:

storage: 2Gi

resources:

limits:

cpu: 100m

memory: 100Mi

requests:

cpu: 4m

memory: 100Mi

secrets: []

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 1000

serviceAccountName: alertmanager-main

version: 0.28.1

应用更改

# 如果是修改现有部署,需先删除原有的 statefulset,否则不会变更数据存储

kubectl delete statefulset alertmanager-main -n monitoring

kubectl apply -f alertmanager-alertmanager.yaml

5.3. Grafana

创建数据目录

Grafana 默认仅部署 1 个副本,所以仅需在节点 k8s-worker-1 上创建数据目录。

# 仅在 k8s-worker-1 上执行

sudo mkdir -p /data/grafana

# 修改目录属主(组和用户见 grafana-deployment.yaml 的 securityContext 段)

sudo chown -R 65534:65534 /data/grafana

sudo chmod -R 775 /data/grafana

创建 PV

# grafana-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: grafana-pv

spec:

capacity:

storage: 10Gi

volumeMode: Filesystem

accessModes: ["ReadWriteOnce"]

persistentVolumeReclaimPolicy: Retain

storageClassName: local-storage

local:

path: /data/grafana

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values: ["k8s-worker-1"]

应用清单

kubectl apply -f grafana-pv.yaml

创建 PVC

Grafana 使用 Deployment 方式部署,非自定义资源,不会自动创建 PVC,因此需要手动创建。

# grafana-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: grafana-pvc

namespace: monitoring

spec:

accessModes: ["ReadWriteOnce"]

storageClassName: local-storage

resources:

requests:

storage: 10Gi

应用清单

kubectl apply -f grafana-pvc.yaml

Grafana 资源清单

修改 grafana-deployment.yaml

# ……

spec:

template:

spec:

nodeSelector:

# 修改:指定部署节点,与 pv 一致

# kubernetes.io/os: linux

kubernetes.io/hostname: k8s-worker-1

volumes:

# 修改:使用 pvc 替代 emptyDir

- name: grafana-storage

# emptyDir: {}

persistentVolumeClaim:

claimName: grafana-pvc

# ……

应用更改

kubectl apply -f grafana-deployment.yaml